^-^

2024. 8. 19. 16:10 · Uncategorized

- Trying out several LLMs with the Hugging Face Pipeline in the GPU environment set up earlier

- Example 1: LG AI Research model: https://huggingface.co/LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct

- Example 2: Upstage SOLAR: https://huggingface.co/upstage/SOLAR-10.7B-v1.0

- Example 3: Junbum's kollama: https://huggingface.co/beomi/llama-2-ko-7b

Ran everything on a V100.

## GCP VM CUDA install

sudo apt-get update

# Install the NVIDIA driver packages, then reboot

sudo apt install nvidia-driver-470 libnvidia-gl-470 libnvidia-compute-470 libnvidia-decode-470 libnvidia-encode-470 libnvidia-ifr1-470 libnvidia-fbc1-470

sudo reboot
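After the reboot it is worth confirming that the driver actually sees the GPU before installing anything else. The sketch below is one way to do that from Python, assuming `nvidia-smi` is on the PATH once the driver is installed. The helper names `parse_gpu_csv` and `query_gpus` are made up for this example.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'name, memory.total, driver_version' CSV rows into dicts."""
    gpus = []
    for line in text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 3:
            continue  # skip anything that is not a 3-column row
        name, memory, driver = parts
        gpus.append({"name": name, "memory_total": memory, "driver": driver})
    return gpus


def query_gpus():
    """Return the GPUs reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi",
         "--query-gpu=name,memory.total,driver_version",
         "--format=csv,noheader"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No GPU visible: driver missing or nvidia-smi failed")
    for gpu in gpus:
        print(gpu)
```

An empty list here means the driver install or the reboot did not take effect, so there is no point moving on to the Python setup yet.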

# Install curl, git, vim

sudo apt-get update

sudo apt-get install -y curl git vim

# Install the build dependencies pyenv needs

sudo apt-get install -y make build-essential libssl-dev zlib1g-dev libbz2-dev libreadline-dev libsqlite3-dev wget llvm libncurses5-dev libncursesw5-dev xz-utils tk-dev libffi-dev liblzma-dev

# Install pyenv

curl https://pyenv.run | bash

# Add the pyenv environment variables to ~/.bashrc (Linux), ~/.bash_profile (macOS, bash), or ~/.zshrc (zsh)

vi ~/.bashrc   # or ~/.bash_profile, ~/.zshrc

# Append the following lines at the very bottom of ~/.bashrc

# In vi, Shift+G jumps to the end of the file.

export PATH="$HOME/.pyenv/bin:$PATH"

eval "$(pyenv init --path)"

eval "$(pyenv init -)"

eval "$(pyenv virtualenv-init -)"

# Add the lines above to ~/.bashrc (bash) or ~/.zshrc (zsh) and save the file.

source ~/.bashrc   # or: source ~/.zshrc

# If sourcing does not take effect, restart the shell

exec $SHELL

# Install pip

sudo apt install python3-pip

# Check which versions are available to install

pyenv install --list | grep <PYTHON_VERSION>

# e.g. pyenv install --list | grep 3.11

# Install the latest release of the major version you want.

pyenv install <PYTHON_VERSION>

# e.g. pyenv install 3.10.x
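Picking "the latest 3.10.x" by eye from the long `pyenv install --list` output is easy to get wrong. The following is a small sketch that does the filtering in Python. It assumes the list prints one version per line, and `latest_patch` is a hypothetical helper, not part of pyenv.

```python
import re
import shutil
import subprocess


def latest_patch(list_output, minor):
    """Return the newest plain CPython release for e.g. '3.10', or None."""
    pattern = re.compile(r"^" + re.escape(minor) + r"\.(\d+)$")
    best = None
    for line in list_output.splitlines():
        match = pattern.match(line.strip())
        if match is None:
            continue  # skips -dev builds and other distributions
        patch = int(match.group(1))
        if best is None or patch > best:
            best = patch
    return None if best is None else f"{minor}.{best}"


if __name__ == "__main__":
    if shutil.which("pyenv") is None:
        print("pyenv not found on PATH")
    else:
        listing = subprocess.run(
            ["pyenv", "install", "--list"],
            capture_output=True, text=True,
        ).stdout
        print(latest_patch(listing, "3.10"))
```

The printed version string can then be passed straight to `pyenv install`.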

from transformers import pipeline, AutoModelForCausalLM, AutoTokenizer

import torch

# Load the model and tokenizer

model_name = "upstage/SOLAR-10.7B-v1.0"

model = AutoModelForCausalLM.from_pretrained(model_name)

tokenizer = AutoTokenizer.from_pretrained(model_name)

# Use the GPU if one is available

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model.to(device)

# Create the pipeline

solar_pipeline = pipeline('text-generation', model=model, tokenizer=tokenizer, device=0 if torch.cuda.is_available() else -1)

# Generate text for an example input

input_text = "Explain the impact of climate change on global economies."

generated_text = solar_pipeline(input_text, max_length=100, num_return_sequences=1)

print(generated_text[0]['generated_text'])

This threw an error.

(envtest) kuba24llm@instance-20240818-211554:~$ python check.py

config.json: 100%|█████████████████████████████████████████████████| 658/658 [00:00<00:00, 4.31MB/s]

model.safetensors.index.json: 100%|█████████████████████████████| 35.8k/35.8k [00:00<00:00, 107MB/s]

model-00001-of-00005.safetensors: 100%|████████████████████████| 4.94G/4.94G [02:47<00:00, 29.5MB/s]

model-00002-of-00005.safetensors: 100%|████████████████████████| 5.00G/5.00G [02:46<00:00, 30.1MB/s]

model-00003-of-00005.safetensors: 100%|████████████████████████| 4.92G/4.92G [02:42<00:00, 30.2MB/s]

model-00004-of-00005.safetensors: 100%|████████████████████████| 4.92G/4.92G [02:44<00:00, 29.8MB/s]

model-00005-of-00005.safetensors: 100%|████████████████████████| 1.69G/1.69G [00:57<00:00, 29.3MB/s]

Downloading shards: 100%|████████████████████████████████████████████| 5/5 [12:01<00:00, 144.27s/it]

Loading checkpoint shards: 0%| | 0/5 [00:00<?, ?it/s]

Traceback (most recent call last):

File "/home/kuba24llm/check.py", line 6, in <module>

model = AutoModelForCausalLM.from_pretrained(model_name)

File "/home/kuba24llm/.pyenv/versions/envtest/lib/python3.10/site-packages/transformers/models/auto/auto_factory.py", line 564, in from_pretrained

return model_class.from_pretrained(

File "/home/kuba24llm/.pyenv/versions/envtest/lib/python3.10/site-packages/transformers/modeling_utils.py", line 3941, in from_pretrained

) = cls._load_pretrained_model(

File "/home/kuba24llm/.pyenv/versions/envtest/lib/python3.10/site-packages/transformers/modeling_utils.py", line 4395, in _load_pretrained_model

state_dict = load_state_dict(shard_file, is_quantized=is_quantized)

File "/home/kuba24llm/.pyenv/versions/envtest/lib/python3.10/site-packages/transformers/modeling_utils.py", line 549, in load_state_dict

with safe_open(checkpoint_file, framework="pt") as f:

RuntimeError: unable to mmap 4943162240 bytes from file </home/kuba24llm/.cache/huggingface/hub/models--upstage--SOLAR-10.7B-v1.0/snapshots/a45090b8e56bdc2b8e32e46b3cd782fc0bea1fa5/model-00001-of-00005.safetensors>: Cannot allocate memory (12)
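The traceback ends in `Cannot allocate memory (12)` while memory-mapping the first 4.9 GB shard, which points at host RAM rather than GPU memory. A rough estimate helps explain why: the five shards add up to about 21.5 GB, which matches 10.7 billion parameters stored at 2 bytes each, and as far as I know `from_pretrained` without an explicit dtype materializes the weights as 32-bit floats, roughly doubling that. The sketch below just does the arithmetic. The parameter count is read off the model name and `estimate_weight_gib` is a hypothetical helper, not part of any library.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def estimate_weight_gib(num_params, dtype):
    """Approximate memory needed for the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    solar_params = 10.7e9  # assumption: taken from the "10.7B" in the model name
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {estimate_weight_gib(solar_params, dtype):.1f} GiB")
```

This counts weights only; activations and the generation cache come on top. Passing `torch_dtype=torch.float16` to `from_pretrained` is the usual first thing to try, though at roughly 20 GiB the model would still not fit on a 16 GB card.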

The process keeps getting killed.
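A bare `Killed` with no Python traceback usually means the Linux out-of-memory killer stopped the process. A quick way to see how much RAM the VM really has is to read `/proc/meminfo`. The sketch below assumes a Linux host, uses made-up helper names, and compares against about 40 GiB, which is what 10.7 billion parameters at 4 bytes each come to.

```python
import os


def parse_meminfo(text):
    """Parse /proc/meminfo content into {field: kibibytes}."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def fits_in_ram(required_gib, meminfo):
    """True if MemAvailable covers the requested amount."""
    available_gib = meminfo.get("MemAvailable", 0) / 2**20  # KiB -> GiB
    return available_gib >= required_gib


if __name__ == "__main__":
    path = "/proc/meminfo"
    if os.path.exists(path):
        with open(path) as f:
            meminfo = parse_meminfo(f.read())
        print(f"MemTotal: {meminfo.get('MemTotal', 0) / 2**20:.1f} GiB")
        print("Room for ~40 GiB of weights:", fits_in_ram(40, meminfo))
```

If this prints `False`, the options are a VM with more memory, a smaller model, or loading in half precision. Running `sudo dmesg | grep -i "killed process"` should confirm whether the OOM killer was responsible.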

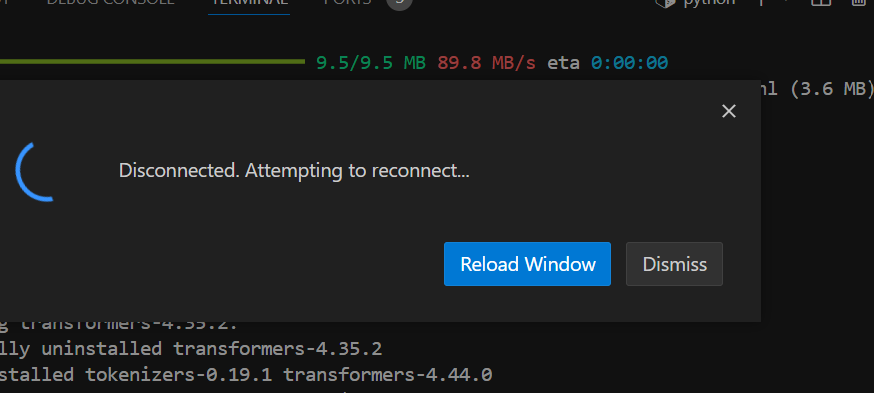

Why does it keep reconnecting?